Assess more, grade less

26 April 2023

You will likely be familiar with the terms “summative assessment” and “formative assessment”. There are a few tech tools available to create formative assessments, or what we’ll call “knowledge checks”.

While summative assessments are an assessment of knowledge (think: final exam), formative assessments are assessments for knowledge. Perhaps you’ve never considered using assessment as a tool to create knowledge, so before we get into The How, let’s briefly understand The Why.

Providing more assessments allows students to better gauge their progress throughout the semester. With frequent feedback from the instructor, students have the opportunity to apply the feedback and improve their performance. Particularly helpful are ungraded and/or low-stakes knowledge checks immediately following learning material. Frequent, formative, low-stakes knowledge checks help students self-assess their understanding of content and, when coupled with immediate and relevant feedback, are one of the key aids to learning identified by cognitive scientists (Boettcher and Conrad, 2010; Lahey, 2014; Simonson et al., 2015).

To make one point crystal clear: by more assessment, we do not mean more grading. Remember, frequent formative assessment often works best when it is ungraded and purely for self-assessment. In general: assess more, grade less.

Now on to The How. There are three primary tools at our disposal: the Quiz activity in Akoraka | Learn, H5P, and Panopto.

Quizzes in Akoraka | Learn

Most of us currently use the Quiz activity in Akoraka | Learn for midterm tests and final exams. We’d encourage less of that use, and more use for knowledge checks. This option differentiates itself from the other two options (H5P and Panopto) because you are providing the knowledge check separately from the learning material. For example, students complete the Module 1 readings, and then answer a few short questions you’ve created using the Quiz activity. This “Module 1 Knowledge Check”, as it could be called, could be a mix of multiple choice and short answer questions. Critically, whatever the question type, students receive immediate feedback upon submission. Multiple choice questions are not only marked as correct/incorrect, but students are also given explanations of why their answer is correct/incorrect – this is feedback pre-programmed into the quiz by you. Short answer questions cannot be auto-graded, but you can provide feedback in the form of a model answer and have students self-assess their proximity to your expert answer.

H5P and Panopto allow you to combine the knowledge check with the content – they become an integrated piece of learning material. Check out the examples below:

H5P Example (skip ahead to minute 24)

Panopto Example

Bonus Example

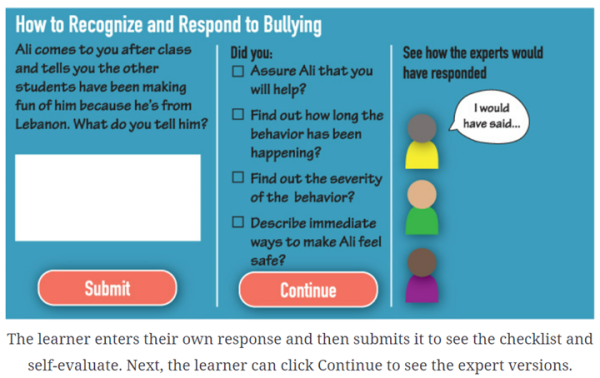

This final example doesn’t use any specific technology, but it is a powerful illustration of formative assessment encouraging student-directed learning while also teaching students how to think like an expert.

Example of student-directed self-assessment with real-world relevant feedback. Source: Dirksen, J. (2016). Design for how people learn (2nd ed.). p.280

If you remain unconvinced, consider one final incentive: research has shown a correlation between student cheating and courses with a small number of high-stakes assessments. A course with many lower-stakes assessments, as we are suggesting, may provide less incentive to cheat.

Disincentivise cheating and improve student outcomes with more assessment and less grading. Register for our workshop on 3 May where we’ll go through the steps to create knowledge checks like the ones shown above.

References and extra notes

Boettcher, J.V. & Conrad, R. (2010). The online teaching survival guide: Simple and practical pedagogical tips. Jossey-Bass. p.126.

(“The quiz tools within [LMSs] are excellent for keeping students on track, increasing learning, and minimizing instructor time on grading… use [quizzes] for important core concepts, factual knowledge, and… provide a low point value for completion…”)

Lahey, J. (2014). Students should be tested more, not less: When done right, frequent testing helps people remember information longer. Retrieved from: https://www.theatlantic.com/education/archive/2014/01/students-should-be-tested-more-not-less/283195/

(“Henry L. Roediger III, a cognitive psychologist at Washington University… found, ‘Taking a test on material can have a greater positive effect on future retention of that material than spending an equivalent amount of time restudying the material.’ This remains true ‘even when performance on the test is far from perfect...’

Formative assessments are not meant to simply measure knowledge, but to expose gaps in knowledge at the time of the assessment so teachers may adjust future instruction accordingly. At the same time, students are alerted to these gaps, which allows them to shape their own efforts to learn the information they missed. Roediger asserts that educators should be using formative assessments early and often… to strengthen learning during the unit rather than waiting until the end and giving a summative assessment.

Continuous formative testing promotes the cognitive processes that have been shown to maximize long-term retention and retrieval. Frequent testing ‘not only measures knowledge, but changes it, often greatly improving retention of the tested knowledge,’ says Roediger.”)

Simonson, M., Smaldino, S., & Zvacek, S. (2015). Teaching and learning at a distance: Foundations of distance education (6th ed.). Information Age Publishing. p.240.

(“Ongoing assessment activities are woven into the fabric of the instructional process so that determining student progress does not necessarily represent to students a threat, a disciplinary function, or a necessary evil, but simply occurs as another thread within the seamless pattern of day-to-day classroom or training events… any misconceptions held by learners that might interfere with later progress are identified and addressed before they become obstacles to further learning... ‘The point is to monitor progress toward intended goals in a spirit of continuous improvement.’”)